Published on 06 June 2024

To state the obvious, data are among the most valuable assets your organization has. Are you managing them as such? Data management is essential: it enables organizations to benefit fully from the value of their data, going beyond compliance to build trust and improve decision-making.

Since business processes are increasingly data-driven and automated, a lack of transparency in the quality of data represents a risk. Regulators have been directing their attention to data management, leading to the creation of new regulations, which require financial institutions to improve their practices and standards in the field.

Since regulations greatly affect the processing of data, this article presents an overview of these regulations and explains how to turn the resulting challenges into opportunities. We focus on the BCBS 239 principles, offering insights beyond the scope of the regulation and sharing experiences and challenges on how best to implement them.

What are BCBS 239 principles?

Following the global financial crisis of 2008, banking regulators questioned whether financial institutions were able to aggregate risk exposures and identify concentrations quickly and effectively. As stated by the Basel Committee, one of the lessons learned from the crisis is that “banks’ IT and data architectures were inadequate to support the broad management of financial risks.”

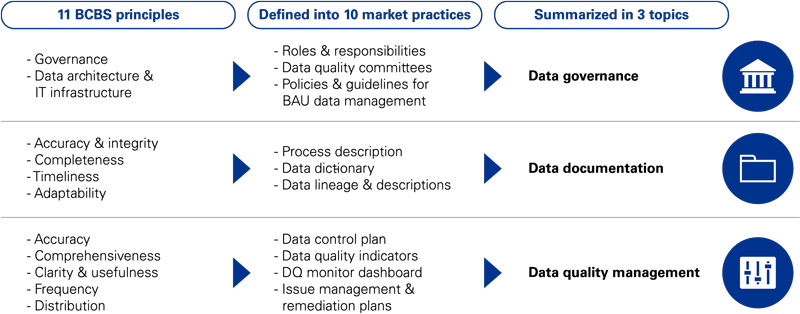

In response, regulators included stronger data aggregation requirements as part of the Pillar 2 guidance. The resulting BCBS 239 framework contains 11 principles addressed to banks (alongside three addressed to supervisors). These 11 principles are standard practices divided into three categories: data governance, data documentation and data quality management.

Refer to Principles for effective risk data aggregation and risk reporting for more details.

How BCBS 239 created a momentum for data management

The BCBS 239 principles were initially aimed at Global Systemically Important Banks (G-SIBs). Yet, the principles have become a standard across the banking industry, as local supervisors follow the BCBS recommendations and apply the principles to Domestic Systemically Important Banks (D-SIBs). This however doesn’t mean that only these banks are impacted. We see a change in regulatory thinking and a clear push towards better data management beyond the BCBS 239 principles, thereby affecting all financial institutions. We also see more and more organizations (not only banks) eager to improve their data management and encourage an appreciation for the importance of data among their teams.

The BCBS 239 principles have inspired different local initiatives and regulations that aim to improve data management and to broaden the target audience and scope (e.g. NBB circular 2017_27, data management streams as part of on-site inspections). The topic of data is now almost systematically included in new regulations and inspections, reflecting its importance to the regulator.

What is the rate of compliance with the BCBS 239 principles?

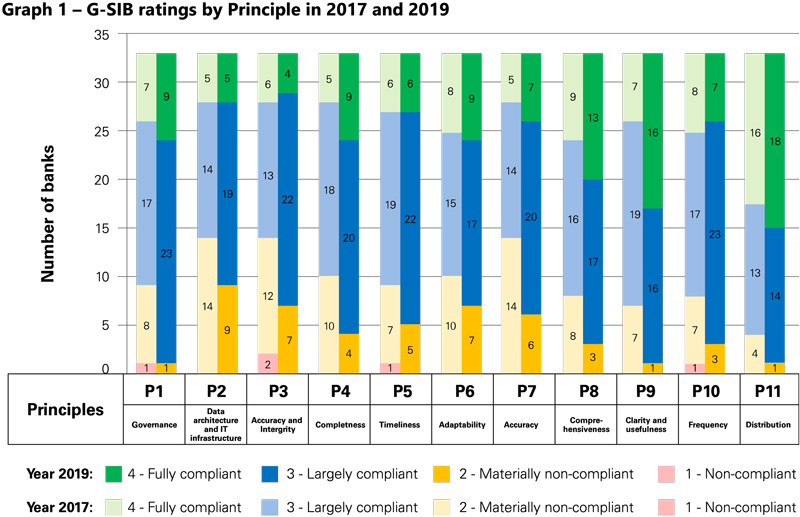

According to a 2020 analysis by the Basel Committee of Banking Supervision, the current compliance of G-SIBs with BCBS 239 principles is illustrated in the graph below:

Basel Committee on Banking Supervision. (2020, April). Graph 1 – G-SIB ratings by Principle in 2017 and 2019 [Graph]. Progress in adopting the Principles for effective risk data aggregation and risk reporting. https://www.bis.org/bcbs/publ/d501.pdf

Few surveyed institutions are fully compliant with all principles, and some are even materially non-compliant. However, there was a positive evolution towards full compliance between 2017 and 2019. Regulators understand that compliance takes time, and showing that steps are being taken (as part of a clear roadmap) to improve the situation is usually well received. However, banks that have significant delays compared to their peers are more likely to face fines and/or increased capital requirements, as well as additional scrutiny from regulators by way of, for example, on-site inspections.

The principles on which institutions scored most poorly were:

Principle 2 - Data architecture and IT infrastructure

Some key issues regarding this principle identified by the ECB via its thematic review in 2018 were: a lack of integrated solutions for data aggregation and reporting, a lack of a homogeneous data taxonomy, a lack of complete and automated consistency checks and the absence of a clear escalation process. In addition to these issues, the ECB highlighted some best practices, including the use of common data sources for the production of all reporting, the automation and documentation of controls, a constant search for data quality improvement across the whole organization and a clear escalation process involving all levels of management.

Principle 3 - Accuracy and Integrity

The main issues were incomplete data quality policies that had not been formally approved, incomplete key quality indicators, untraced and undocumented changes, missing reconciliation procedures, as well as nonexistent audit trails. Best practices identified by the ECB included group-wide data quality standards and policies reviewed on a regular basis, key quality indicators for both internal and external data, a data quality certification process for the golden sources, as well as clear updating of the traceability and documentation when changes are made.

Principle 6 - Adaptability

Areas of concern included the limited ability to deploy local risk data obtained from subsidiaries, a lack of accuracy and completeness of granular risk data and an overreliance on unstructured data sources. The best practices suggested by the ECB included data customization capabilities and flexible data aggregation processes.

Which challenges do financial institutions encounter along their journey?

Implementing data management principles requires specific adjustments. The kinds of challenges usually depend on the specificities of the firm. Below are several insights on how to tackle some of them:

Project-related challenges

It is important to avoid going too big too fast or too big for too long. Instead, it is better to implement new standards progressively, starting with a limited scope of key data elements (typically, organizations start with Finance or Risk data elements that are used in key reports, such as LCR, CET1, LR and PD). When prioritizing and scoping, it is essential to consider the upstream and downstream dependencies of the key data elements considered for the project, since the standards are expected to apply to all of these dependencies. Clear selection criteria should be defined in order to avoid subjective selection based on data owners’ preferences. Selection criteria can include, for example, the degree of importance for the regulator, the degree of importance for internal decision-making bodies or business processes, and the potential for reputational damage, erroneous investment or commercial decisions, or financial losses.
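To make the scoping step concrete, here is a minimal sketch in Python of how upstream and downstream dependencies of a key data element could be collected from a lineage map. The element names and the `LINEAGE` structure are purely illustrative assumptions, not taken from any specific tool or from the regulation.

```python
from collections import deque

# Hypothetical lineage map: each element points to the elements derived from it.
LINEAGE = {
    "loan_balance": ["exposure_at_default"],
    "collateral_value": ["exposure_at_default"],
    "exposure_at_default": ["risk_weighted_assets"],
    "risk_weighted_assets": ["cet1_ratio"],
    "cet1_capital": ["cet1_ratio"],
    "cet1_ratio": [],
}


def downstream(element, lineage):
    """Return every element derived, directly or indirectly, from `element`."""
    seen, queue = set(), deque([element])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


def upstream(element, lineage):
    """Return every element that `element` depends on, directly or indirectly."""
    # Invert the map so that the same traversal walks towards the sources.
    reverse = {}
    for parent, children in lineage.items():
        for child in children:
            reverse.setdefault(child, []).append(parent)
    return downstream(element, reverse)


def project_scope(key_elements, lineage):
    """Key data elements plus all of their upstream and downstream dependencies."""
    scope = set(key_elements)
    for element in key_elements:
        scope |= upstream(element, lineage) | downstream(element, lineage)
    return scope


print(sorted(project_scope(["exposure_at_default"], LINEAGE)))
```

In this illustrative map, scoping on `exposure_at_default` pulls in its two sources and the two figures computed from it, but not `cet1_capital`, which only shares a downstream report with it.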

Evolving requirements from the business, as the project develops and becomes more concrete (e.g. additional information to complete the business glossary), may decrease the efficiency of the exercise at some point. It is best to take the time at the beginning of the project to discuss expected deliverables with the key stakeholders, and to demonstrate what the solution will look like. Performing the implementation in phases with a pilot data domain is a good test and lessens the impact of any potential changes. The requirements coming from new regulations (e.g. different data frequencies) also place obstacles along the data journey and may require the allocation of key resources.

Limited resources force choices and prioritization. Highlighting a few use cases that bring added value can help build support from the business and progressively show the project’s effectiveness to management. The multiple objectives, both medium and long term, might require starting a global program or project backed by appropriate support from management and experts. However, finding qualified personnel is not easy due to the shortage of data management experts.

Data transformation related challenges

Internal alignment on business concepts and data element definitions (i.e. getting the whole company to speak the same language across divisions and teams) can take a lot of time to achieve. While harmonizing as much as possible should be the goal, it is important to understand that some business concepts/terms can mean different things to different departments. One solution is to introduce the notion of “contexts”, i.e. allowing for different definitions depending on how the concept is used. For example, ratings are commonly used in the banking industry for models or regulatory reporting. However, individual teams might use external or internal ratings and use them differently, which changes the meaning of the term. Another example of a notion that challenges organizations is that of “balances.” Multiple types of balances might be created by various teams and, depending on the scope, have different meanings. When these contexts are clarified and shared, it becomes easier to identify a data owner within each team, which creates better understanding and reinforces collaboration.
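As an illustration of the “contexts” idea, the following Python sketch models a business glossary term that carries one definition and one data owner per context. The class names, the definitions and the team names are hypothetical and only meant to show the shape of the data structure.

```python
from dataclasses import dataclass, field


@dataclass
class ContextualDefinition:
    definition: str
    owner: str  # data owner accountable for the term in this context


@dataclass
class GlossaryTerm:
    name: str
    contexts: dict = field(default_factory=dict)  # context name -> ContextualDefinition

    def define(self, context, definition, owner):
        """Register (or overwrite) the definition used in a given context."""
        self.contexts[context] = ContextualDefinition(definition, owner)

    def lookup(self, context):
        """Return the definition for a context, failing loudly if none exists."""
        try:
            return self.contexts[context]
        except KeyError:
            raise KeyError(
                f"'{self.name}' has no definition for context '{context}'"
            ) from None


# Illustrative content only: the same term, defined differently per context.
rating = GlossaryTerm("rating")
rating.define(
    "credit risk modelling",
    "Internal rating assigned by the bank's own models.",
    "Risk Modelling team",
)
rating.define(
    "regulatory reporting",
    "External rating issued by a rating agency.",
    "Regulatory Reporting team",
)

print(rating.lookup("regulatory reporting").owner)
```

Keeping the owner next to each contextual definition reflects the point made above: once the contexts are explicit, it is straightforward to see who is accountable for each meaning of the term.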

Collaboration between IT departments and the business teams, as well as between the different business lines, can be difficult in siloed organizations, especially when business lines have different maturity levels of data management. It is important to have an overarching strategy in place, as well as appropriate data bodies such as a data office that coordinates data activities, and a change management team that ensures the embedding of the data mindset and potential new tools in work routines. To ensure good collaboration, regular alignment with all key stakeholders is necessary, as well as the support and sponsoring of executive members.

The implementation of a new data culture in the organization, including the governance and maintenance of all deliverables, is necessary to have a lasting impact and to avoid wasting effort on a one-off exercise. It requires convincing people and getting them on board. In order to do so, it is important to involve them from the beginning of the project and to communicate regularly. Proper communication on the project is important to avoid creating false expectations, fears and rumors. Measuring the progress and the benefits that the project provides, as well as communicating them, is a key element in its success. It is recommended to present the big picture, highlighting the short-term and long-term added value it will bring to the whole organization, rather than focusing only on compliance aspects. Finally, defining clear roles and responsibilities and training people appropriately are essential.

A complex IT environment can be a real challenge when implementing data management. Most data management tools support only a limited number of technologies, whereas complex environments include a large number of applications and databases, making it more difficult to apply data management standards. It is also often mistakenly thought that technology will solve all problems. Sometimes processes and culture must change as well for the data transformation to work.

Regulatory challenges

Implementing regulations, in particular when data and data management are impacted, is always a challenge because they leave room for interpretation, making it difficult for organizations to evaluate whether they correctly understood the expectations of the regulator. A good way to solve this is to reference public results of previous reviews performed by the regulator (such as the Thematic Review on effective risk data aggregation performed by the ECB), and to use the expertise of companies able to benchmark against more mature financial institutions.

Regulatory deadlines sometimes require prioritization (e.g. focus efforts on key data domains such as Risk & Finance data). Defining a clear and realistic roadmap will help to convince the regulator that the financial institution is progressing towards compliance.

How to ensure the maintainability of data management?

To enforce data management policies and maintain long-term compliance with standards, technology is recommended because it can significantly reduce manual work and operational risks by automating tasks. There are multiple features available on the market, some must-haves and some nice-to-haves, depending on the needs of the company. Among them are:

- Automated extraction/discovery of metadata from data sources to fill in a data catalogue and data dictionary. Tools have scanners connecting to very diverse technologies, such as databases, ETL and scripts. Metadata from these technologies are extracted, interpreted and loaded into a data dictionary or a data catalogue. Changes are traced and reflected automatically.

- Automated building of data lineage. Based on extracts of metadata, tools connect data elements with each other, as well as with business concepts, showing the end-to-end journey of data. They can also document transformations based on automated ETL flows analysis.

- Automated classification of data. Tools can classify data on a large scale, allowing for the identification of personal or critical data that must be protected. They can also create intelligent groups/clusters useful for sorting/browsing through data in the data catalogue.

- Automated notifications to data roles. Tools enable the reception of customized notifications and an overview of actions that data roles must take on their data (e.g. reviewing changes in the documentation or approving a new usage of the data). This makes it possible to keep up with all changes.

- Automated reporting on data management KPIs. Tools make it possible to define and measure KPIs (e.g. number of data elements validated), which allows for automated reporting for management.

- Automated audit trail and history. Tools can keep track of all changes performed, increasing trust in the reports and streamlining corrections and comparisons over time.

- Automated measurement/monitoring of data quality. Tools make it possible to measure data quality on a regular basis and on a large scale. Configuring the data quality monitoring is often the difficult part; however, modern tools can suggest data quality rules automatically via AI.

- Automated detection of anomalies via data profiling. Tools exist that can analyze data and detect abnormal patterns.

- Automated data cleansing. Tools can be used to apply large-scale corrections/cleaning strategies on data.

- Self-service. Tools empower data users by giving them all they need to work autonomously without the need to regularly contact data stewards and data owners.
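To give a feel for what the data quality measurement and KPI reporting features in this list compute, here is a minimal Python sketch. It is not how any particular tool works: the sample records, the field names, the rule on probability of default (PD) and the 95% threshold are all assumptions chosen for illustration.

```python
# Hypothetical sample of risk records; None or "" marks a missing value.
RECORDS = [
    {"id": 1, "pd": 0.02, "rating": "A"},
    {"id": 2, "pd": 1.5, "rating": "B"},   # PD outside the [0, 1] range
    {"id": 3, "pd": None, "rating": "C"},  # missing PD
    {"id": 4, "pd": 0.10, "rating": ""},   # missing rating
]

MISSING = (None, "")


def completeness(records, field_name):
    """Share of records in which `field_name` is present and non-empty."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field_name) not in MISSING)
    return filled / len(records)


def validity(records, field_name, rule):
    """Share of non-missing values of `field_name` that satisfy `rule`."""
    values = [r[field_name] for r in records if r.get(field_name) not in MISSING]
    if not values:
        return 0.0
    return sum(1 for v in values if rule(v)) / len(values)


def quality_report(records, threshold=0.95):
    """Compute each KPI and flag whether it meets the (assumed) threshold."""
    scores = {
        "pd completeness": completeness(records, "pd"),
        "rating completeness": completeness(records, "rating"),
        "pd validity": validity(records, "pd", lambda v: 0.0 <= v <= 1.0),
    }
    return {name: (score, score >= threshold) for name, score in scores.items()}


for name, (score, passed) in quality_report(RECORDS).items():
    print(f"{name}: {score:.0%} {'OK' if passed else 'BELOW THRESHOLD'}")
```

Run on a schedule and stored with a timestamp, scores like these would also feed the KPI reporting and audit trail features described above.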

The choice of tool can differ from one institution to another, depending on the size and complexity of the organization and its IT landscape. There are many different tools on the market; some are more advanced (and costly) than others. An analysis of the institution’s requirements should be performed to determine the benefits of using a specific tool. Once the tool is selected, proper implementation will ensure its use in the organization’s daily operations.

How KPMG can help

Leveraging our experience and track record across many industries, KPMG can assist you in overcoming the above challenges throughout your data transformation. Thanks to our multidisciplinary approach (combining data experts with sector knowledge), we adapt our proven methods and tools to specifically help you comply and reap the benefits of trustworthy data assets.

In the context of such a regulatory-driven data transformation, we can assist you by:

- Assessing your organization against regulatory requirements

- Designing your Data Strategy and guiding the implementation of your transformation plan

- Guiding you through the identification and prioritization of remediation actions (on data governance, metadata management, data quality, data lineage, etc.)

- Evaluating the best tools to support your progression and assist in their integration